As applications grew in complexity, and with them the need to scale, the industry adopted the microservices approach to software architecture.

Partitioning a system into standalone, intercommunicating services brought the inherent flexibility of loose coupling, but also a host of complexities.

Not least among them was data synchronisation.

This architecture typically relied on message queues and event sourcing, which made administering and monitoring it non-trivial. So were the automated deployment strategies it necessitated.

I often think back to Martin Fowler’s sage write-up on microservices adoption and wonder how many small-scale greenfield projects are panning out today.

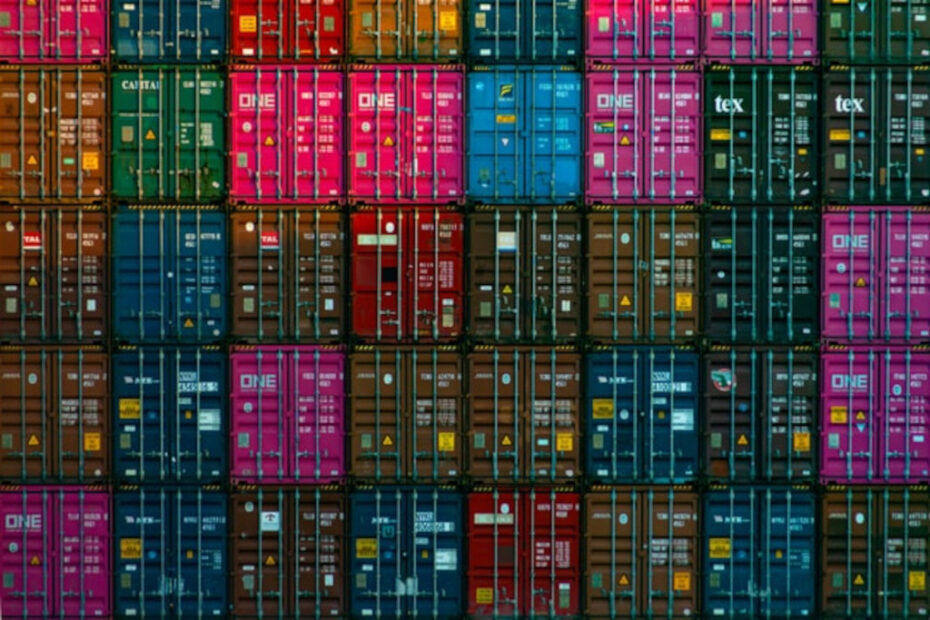

As we entered the world of Cloud Native and Twelve-Factor applications, we saw how software container technologies sat naturally with SOA deployments, and no-one could dispute the operational efficiencies that these self-load-balancing, self-healing and self-deploying platforms brought to the fore.

At times, though, I sensed that some of these technology stacks and methodologies were adopted for their novelty, often leaving teams further down the line with what I imagine amounts to technical debt in their architectures.

That said, microservices have undeniably solved important problems for some very large companies and their projects.

When not to use containers

There is a widespread misconception that application containers simplify developer workflows and deployment strategies. They don’t.

In fact, they add complexity to these processes as a tradeoff for implementing microservices.

Container platforms must provide the architectural infrastructure needed to run microservices in production, including service discovery, monitoring, high availability, load balancing, secrets management and other concerns. At scale.

They also require image repositories, and introduce new challenges around the size of images transferred between deployment cycles.

They also complicate the developer experience rather than simplifying it, as is commonly claimed, since developers must work out how to incorporate a container-based workflow on their local machines.

Containers do bring some inherent benefits, such as versioning, dependency encapsulation and consistent deployments, but these advantages are few relative to the complexity they add overall.

And while traditional CI/CD pipelines remain perfectly viable for most new projects, the choice of a container and microservices approach instead has even spawned an entire ecosystem of providers offering build time and server resources as services.

Container-based projects not only take longer to deploy but also consume more hardware resources, and they have given rise to dedicated job roles such as container-focused DevOps engineers.

Added to this is a fundamental error made at the outset: the decision to use application containers at all.

The main use case for container technology is microservices. If you are not implementing microservices then don’t use containers.

As stated earlier, containers simplify running microservices in production, providing the architectural components the architecture needs to work with anywhere up to thousands of cooperating services.

I estimate that about 80% of projects are better suited to traditional architectures, given that microservices emerged as a response to an organisational problem: very large projects in which different departments were responsible for different parts of the system under development.

Compounding the issue is the near-ubiquitous adoption of complex Kubernetes-based container orchestration platforms instead of something simpler such as Docker Swarm, depending of course on the system’s requirements.

Summary

As always, choosing the right tool for the job matters as much in software architecture as anywhere else.